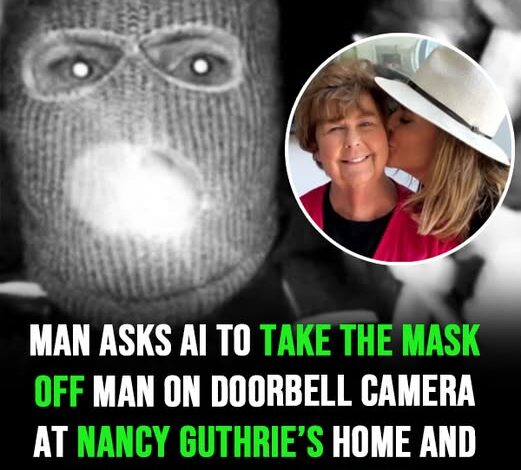

Man asks AI to take the mask off the man on the doorbell camera at Nancy Guthrie's home and gets eerie response!

The search for Nancy Guthrie, mother of Today co-anchor Savannah Guthrie, has now entered its second week, deepening concern among friends, viewers, and the broader public. As investigators continue to review evidence and appeal for information, online speculation has intensified—particularly after doorbell camera footage showing a masked individual outside her Arizona home was released to the public.

Authorities have confirmed that the footage is part of the ongoing investigation. The masked person’s identity remains unknown, and law enforcement has not publicly named any suspects. Officials have urged anyone with credible information to come forward, emphasizing that the investigation is active and that verified evidence—not online theories—will determine next steps.

Amid the uncertainty, a new and controversial development has emerged from outside official channels. A YouTuber known as Professor Nez published a video claiming to have used artificial intelligence to digitally “remove” the mask from the individual captured in the doorbell footage. The video quickly gained traction online, drawing both fascination and criticism.

In his presentation, Nez explained that he used generative AI software to create what he described as a possible reconstruction of the masked figure’s uncovered face. He stated that the resulting image resembled a man identified as Dominic Aaron Lee Evans, whom he described as a longtime friend and former bandmate of Tomasso Cioni, Annie Guthrie’s husband and Nancy Guthrie’s son-in-law.

“What you see on the screen right here is AI putting a mask on Dominic Aaron Lee Evans,” Nez said during the video, suggesting that the reconstructed face and the masked figure shared similarities.

He pointed to features such as facial hair, eyebrow shape, and eye spacing as evidence of what he called “eerily similar” characteristics. “That is very eerily similar… if you look at the facial hair on the chin there, you look at the eyes and the eyebrows, that is eerie,” he stated.

Nez said he used Grok AI to generate the reconstruction and described the output as “really, really accurate as far as what the individual may look like.” He added that modern AI tools are “absolutely out of this world” in their ability to fill in missing visual details.

However, law enforcement agencies have not verified, endorsed, or commented on the AI-generated image. Authorities have not publicly identified any suspect connected to the case, nor have they confirmed any link between the masked individual in the footage and the person named in the video.

Experts in artificial intelligence and digital forensics have repeatedly cautioned against treating generative AI reconstructions as factual evidence. When working from limited or low-resolution footage—particularly when a subject’s face is partially obscured—AI systems rely heavily on pattern prediction rather than confirmed data. The resulting images can appear convincing while still being speculative.

Digital reconstruction tools, while increasingly sophisticated, do not function as forensic identification systems. They generate approximations based on probabilities, not certainties. In situations involving active investigations, prematurely associating an individual with a crime based on AI imagery can carry significant ethical and legal implications.

Online reaction to the YouTube video has been sharply divided. Some viewers described the side-by-side comparison as “uncanny” and compelling. Others warned that circulating AI-generated identifications without official backing risks spreading misinformation or unfairly implicating private individuals.

The case has also sparked broader conversation about the role of artificial intelligence in public investigations. As AI tools become more accessible, private citizens are increasingly using them to analyze footage, enhance images, or speculate about identities. While technological curiosity is understandable, experts caution that such efforts can interfere with investigations or distort public perception.

Law enforcement agencies typically rely on trained forensic analysts, corroborated evidence, and controlled investigative methods. Unlike AI-generated imagery shared online, official identifications are subject to strict evidentiary standards designed to protect both victims and suspects.

In the current case, authorities have reiterated that the investigation remains ongoing. Beyond the original doorbell footage, no additional identifying details have been publicly released. Police continue to review evidence and request that anyone with verified information contact them directly.

For Nancy Guthrie’s family, the ongoing search has been marked by uncertainty and emotional strain. Public support has poured in from viewers, colleagues, and members of the community. Savannah Guthrie has not publicly commented in detail, but expressions of solidarity have appeared across social media platforms.

The involvement of AI in the public narrative highlights a growing tension between technological possibility and responsible use. While generative tools can create realistic imagery in seconds, realism does not equal truth. In cases involving missing persons or potential criminal activity, accuracy and caution are paramount.

Legal analysts note that misidentification—particularly when amplified online—can cause reputational harm and complicate investigative efforts. For that reason, they stress the importance of relying on official updates rather than speculative reconstructions.

The rapid spread of the YouTube video demonstrates how quickly digital content can influence public conversation. Within hours of publication, clips and screenshots circulated widely, prompting debate not only about the case itself but also about the ethics of crowd-sourced investigation using AI.

As authorities continue their work, they have emphasized patience and restraint. Identifying individuals from partial footage is a complex process requiring corroboration across multiple evidence streams. Public speculation, while understandable, does not substitute for investigative procedure.

For now, the masked individual seen in the footage remains unidentified in official records. The AI-generated image introduced by the YouTuber exists solely as a speculative interpretation, unsupported by law enforcement confirmation.

The situation underscores a broader reality of the digital age: powerful tools can create persuasive visuals, but responsibility lies in how those tools are used and interpreted. Until investigators release verified findings, any AI-generated images should be regarded as hypothetical representations rather than established facts.

As the search continues, the priority remains the safe resolution of the case and the careful handling of information. In an era where technology can amplify both insight and error, measured judgment is more important than ever.